EyeNav

Real-time eye tracking and voice-controlled web browsing with automated test script generation

Team Members

Table of Contents

Purpose

EyeNav is a modular web interaction framework. It fuses real-time eye-tracking (with Tobii-Pro SDK) and on-device natural-language processing (using Vosk) within a Chrome extension and Python backend to deliver:

- Accessible input for users with motor impairments

- Hands-free browser control for developers and general users

- Automated test generation via record-and-replay (Gherkin + WebdriverIO)

By orchestrating gaze-driven pointer control, voice-command parsing, and concurrent logging threads, EyeNav enables both interactive accessibility and behavior-driven development in web environments.

Video

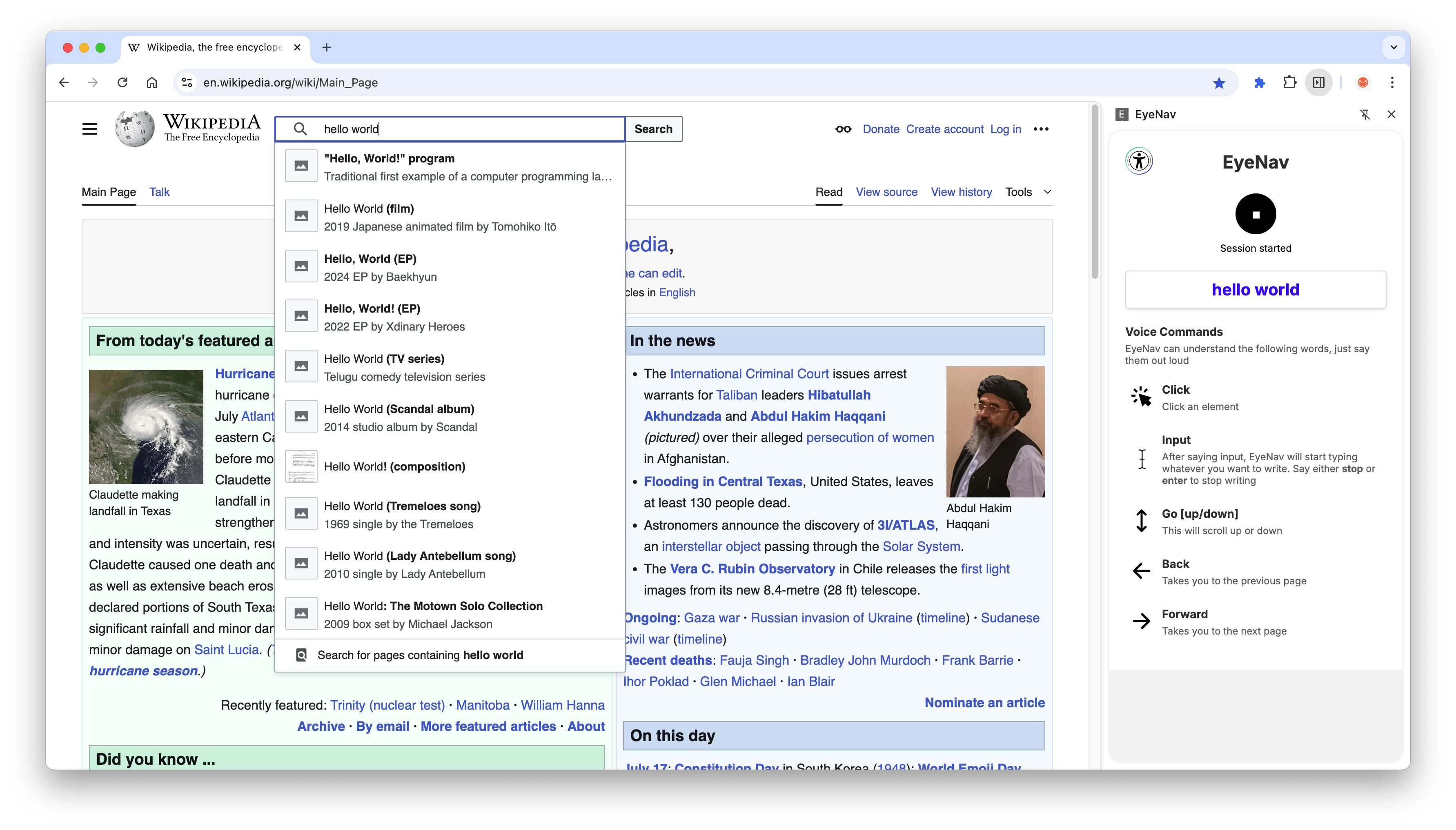

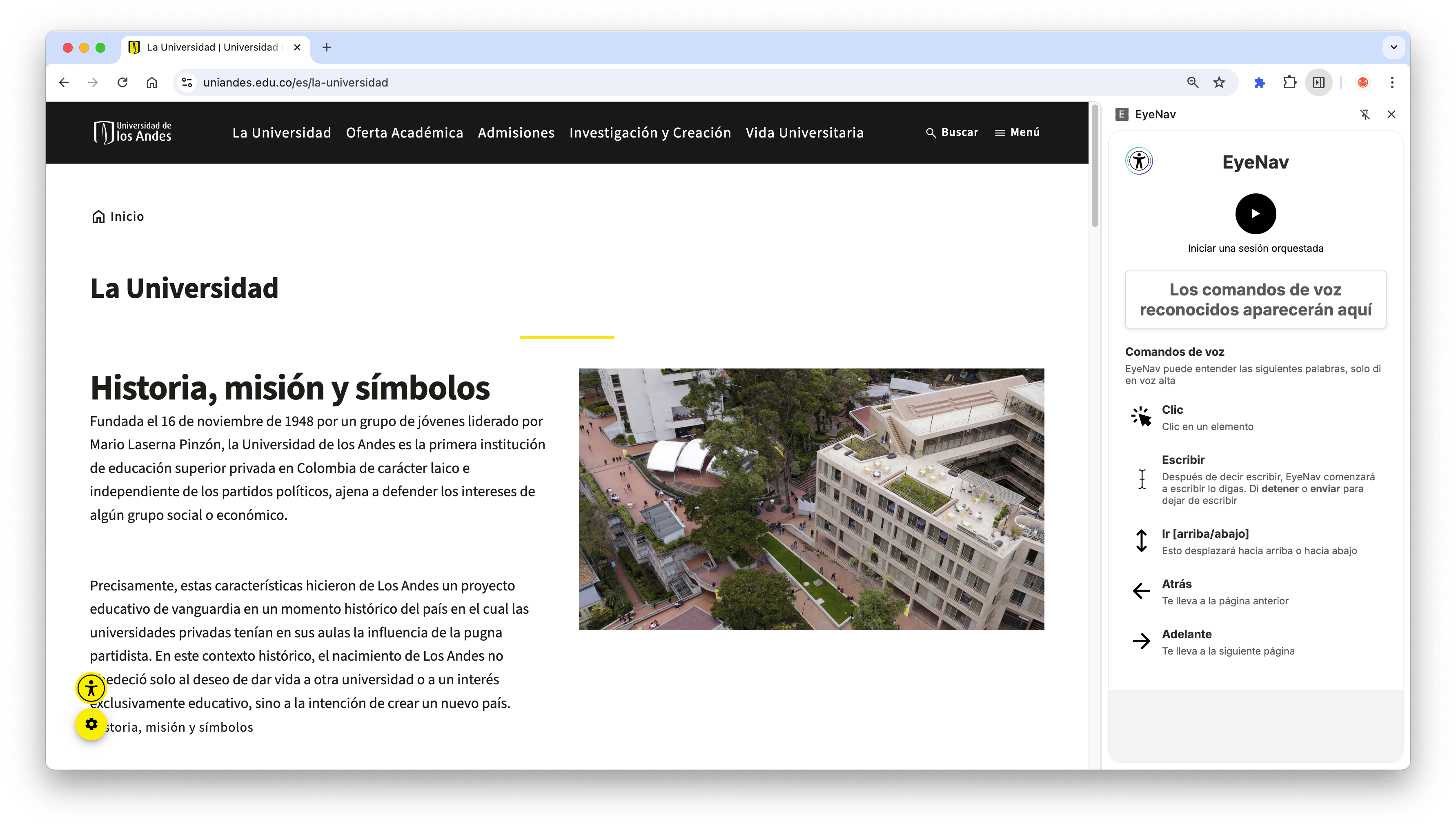

Screenshots

Hardware and Software Requirements

- A Tobii eye tracker

- A microphone

- Google Chrome (v114+)

- Python 3

Summary

EyeNav implements the following core features:

- Gaze-Driven Pointer Control.

Maps eye gaze to cursor movements using the Tobii Pro Nano and the tobii-research SDK.

- NLP Command Parsing

Transcribes and interprets voice commands (click, input, scroll, navigate) with Vosk running locally.

- Record-and-Replay Test Generation.

Logs interactions in Gherkin syntax and replays them via Kraken & WebdriverIO.

- Modularity

Enable or disable any of the three subsystems (gaze, voice, test logger) independently.

- Internationalization

Supports English and Spanish out of the box; additional languages can be added by downloading a model and providing a locale translation of the commands.
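To illustrate the gaze-driven pointer control listed above, here is a minimal sketch of turning a gaze sample into a cursor position. It assumes the tracker reports each eye's gaze point as normalized display coordinates in the range 0 to 1, with NaN when an eye is not detected. The function name `gaze_to_pixels` and its parameters are hypothetical and are not taken from the EyeNav code base.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def _is_valid(point: Point) -> bool:
    """A sample is usable only if neither coordinate is NaN."""
    return not (math.isnan(point[0]) or math.isnan(point[1]))


def gaze_to_pixels(left: Point, right: Point,
                   width: int, height: int) -> Optional[Tuple[int, int]]:
    """Average the valid eye samples and scale them to screen pixels.

    Assumption: inputs are normalized (0..1) display coordinates.
    Returns None when neither eye produced a valid sample.
    """
    samples = [p for p in (left, right) if _is_valid(p)]
    if not samples:
        return None
    x = sum(p[0] for p in samples) / len(samples)
    y = sum(p[1] for p in samples) / len(samples)
    # Clamp so that gaze slightly off-screen still maps to a screen edge.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    return int(x * (width - 1)), int(y * (height - 1))


if __name__ == "__main__":
    nan = float("nan")
    print(gaze_to_pixels((0.5, 0.5), (0.5, 0.5), 1920, 1080))
    print(gaze_to_pixels((nan, nan), (1.0, 0.0), 1920, 1080))
```

Averaging both eyes and falling back to a single eye when the other is lost is one common choice; a real implementation would likely also smooth consecutive samples to reduce jitter.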

Installation

- Clone the Repository

      git clone https://github.com/TheSoftwareDesignLab/EyeNav.git
      cd EyeNav

- Backend Setup

  From the backend/ folder:

      python3 -m venv venv
      source venv/bin/activate
      pip install -r requirements.txt

- Chrome Extension

  - Open chrome://extensions/ in Chrome (v114+)

  - Enable Developer mode

  - Click Load unpacked and select extension/

- Open

Usage

- Start Backend

      python backend/main.py

- Load Web Page & Extension

  - Navigate to any web page

  - Click the EyeNav extension icon to open the side panel

- Initiate Session

  - Click Start in the side panel

  - Experiment with gaze, voice commands, or both

- Generate Tests

  - Interactions are logged automatically

  - Generated Gherkin scripts appear in the tests/ directory

  - Replay with Kraken
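As a rough illustration of the test generation step above, the following sketch turns a list of recorded interactions into a Gherkin scenario. The `Interaction` type, the `to_gherkin` function, and the step wording are hypothetical; the steps EyeNav actually emits, and the step definitions Kraken expects, may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Interaction:
    action: str       # one of "navigate", "click", "input", "scroll"
    target: str       # URL, selector, or scroll direction
    value: str = ""   # text typed for "input" actions

# Hypothetical step wording, chosen for illustration only.
STEP_TEMPLATES = {
    "navigate": 'I navigate to page "{target}"',
    "click": 'I click on element "{target}"',
    "input": 'I enter text "{value}" into element "{target}"',
    "scroll": 'I scroll "{target}"',
}


def to_gherkin(feature: str, interactions: List[Interaction]) -> str:
    """Render recorded interactions as a single Gherkin scenario."""
    lines = [f"Feature: {feature}", "", "  Scenario: Recorded session"]
    for index, item in enumerate(interactions):
        # First step sets the context, second starts the actions, rest chain on.
        keyword = "Given" if index == 0 else "When" if index == 1 else "And"
        step = STEP_TEMPLATES[item.action].format(
            target=item.target, value=item.value)
        lines.append(f"    {keyword} {step}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    session = [
        Interaction("navigate", "https://example.com"),
        Interaction("click", "#login"),
        Interaction("input", "#user", "alice"),
    ]
    print(to_gherkin("Demo", session))
```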

Configuration

Voice Model & Language

- English (en) and Spanish (es) models are supported by default. To download more models, change the preferred language in Chrome.

- To check which languages are supported, see the official Vosk documentation.

- Mapping a new language to the commands requires manual translation: add an entry to commands.json under the corresponding language code.
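The sketch below shows one way a per-locale command mapping could drive command parsing. The JSON layout, the Spanish keywords, and the `parse_command` function are assumptions made for illustration; the real structure of commands.json is not documented here and may differ.

```python
import json
from typing import Dict, Optional, Tuple

# Hypothetical layout: locale code -> spoken keyword -> canonical action.
COMMANDS_JSON = """
{
  "en": {"click": "click", "input": "input",
         "scroll": "scroll", "navigate": "navigate"},
  "es": {"clic": "click", "escribir": "input",
         "desplazar": "scroll", "navegar": "navigate"}
}
"""


def parse_command(transcript: str, locale: str,
                  commands: Dict[str, Dict[str, str]]
                  ) -> Optional[Tuple[str, str]]:
    """Map the first spoken word to an action; the rest is its argument.

    Returns None for empty input, unknown locales, or unknown keywords.
    """
    words = transcript.strip().lower().split()
    if not words:
        return None
    action = commands.get(locale, {}).get(words[0])
    if action is None:
        return None
    return action, " ".join(words[1:])


if __name__ == "__main__":
    commands = json.loads(COMMANDS_JSON)
    print(parse_command("Escribir hola mundo", "es", commands))
    print(parse_command("scroll down", "en", commands))
```

Under this assumed layout, supporting another language would only require adding one more top-level key with translated keywords.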

Logging & Selectors

- Locator priority for click events, in order:

  - href

  - id

  - className

  - Computed xPath
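The locator priority above can be sketched as a simple first-match lookup. The function `pick_locator` and the dictionary shape of the element record are hypothetical; only the priority order comes from this README.

```python
from typing import Dict, Optional, Tuple

# Priority order as listed in this README.
LOCATOR_PRIORITY = ("href", "id", "className", "xPath")


def pick_locator(element: Dict[str, Optional[str]]
                 ) -> Optional[Tuple[str, str]]:
    """Return the first non-empty locator in priority order, or None."""
    for key in LOCATOR_PRIORITY:
        value = element.get(key)
        if value:  # skips missing keys, None, and empty strings
            return key, value
    return None


if __name__ == "__main__":
    button = {"href": "", "id": "submit", "className": "btn",
              "xPath": "/html/body/form/button"}
    print(pick_locator(button))
```

Since the XPath is computed, it would normally be available for every element and so acts as the fallback when no attribute-based locator exists.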

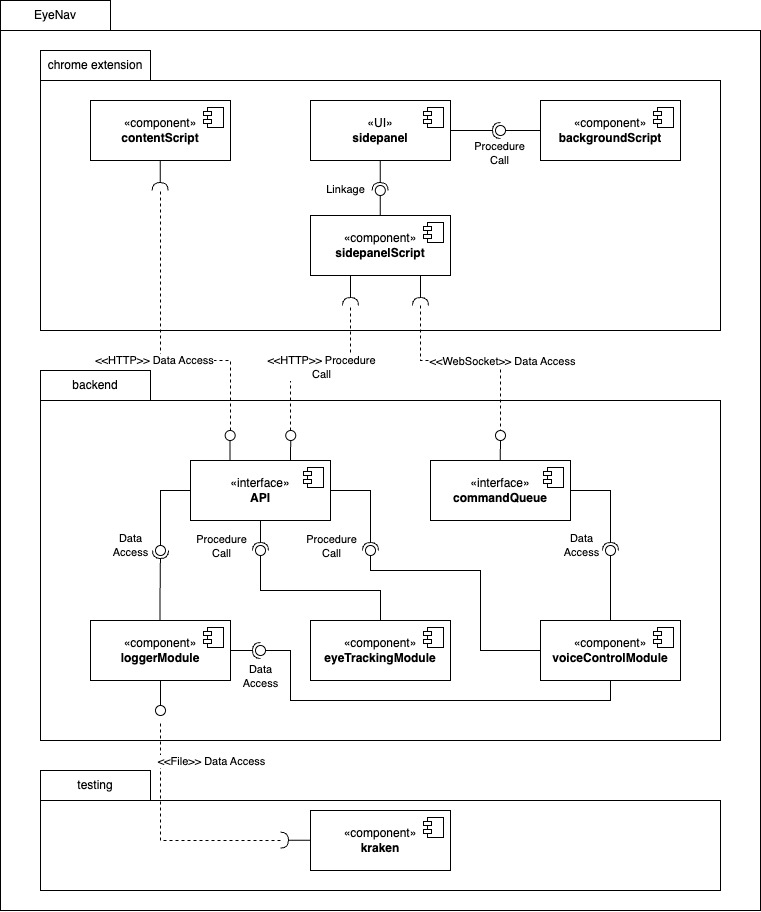

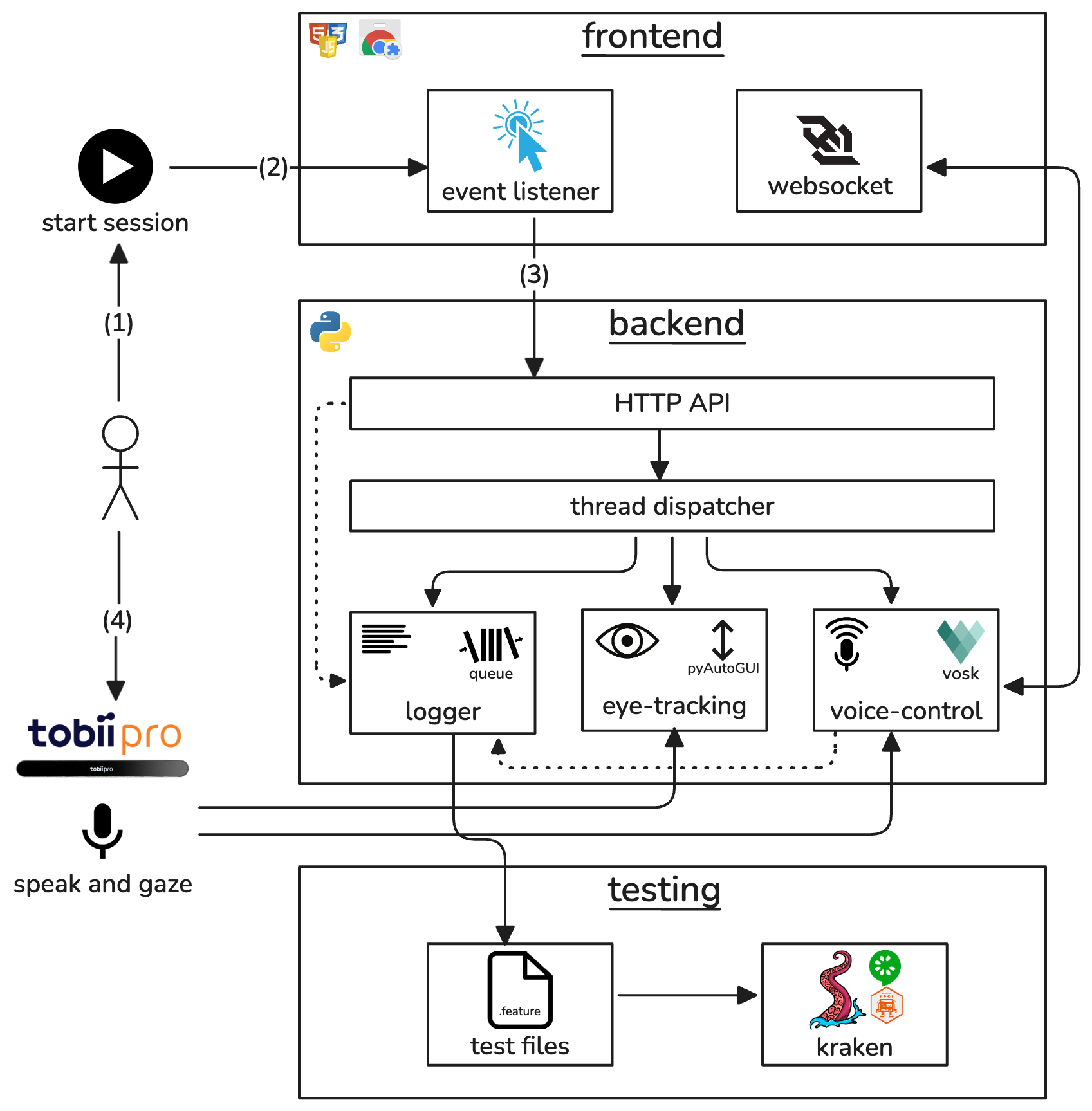

Architecture

Components

- Frontend (Chrome Extension): UI panel + event listener + WebSocket client

- Backend Service: HTTP API + thread dispatcher spawning:

  - Eye-Tracking Thread (Tobii SDK)

  - Voice Thread (Vosk)

  - Logging Thread (Gherkin generator)

- Test Runner: Kraken/WebdriverIO integration for replay
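A minimal sketch of the thread layout described above, using only the Python standard library: input threads push events onto a shared queue and a logging thread drains it. The function names and the event shape are hypothetical, and the producers here replay fixed samples instead of reading from the Tobii SDK or Vosk.

```python
import queue
import threading
from typing import Dict, Iterable, List

Event = Dict[str, str]


def producer(name: str, samples: Iterable[str], out: queue.Queue) -> None:
    """Stand-in for the eye-tracking or voice thread."""
    for sample in samples:
        out.put({"source": name, "data": sample})


def logger(inbox: queue.Queue, log: List[Event]) -> None:
    """Stand-in for the logging thread; stops on a None sentinel."""
    while True:
        event = inbox.get()
        if event is None:
            break
        log.append(event)


def run_session(sources: Dict[str, Iterable[str]]) -> List[Event]:
    """Start one producer per enabled subsystem plus one logger thread."""
    events: queue.Queue = queue.Queue()
    log: List[Event] = []
    log_thread = threading.Thread(target=logger, args=(events, log))
    workers = [threading.Thread(target=producer, args=(name, data, events))
               for name, data in sources.items()]
    log_thread.start()
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    events.put(None)  # all producers finished; tell the logger to stop
    log_thread.join()
    return log


if __name__ == "__main__":
    # Leaving a key out mimics disabling that subsystem.
    for event in run_session({"gaze": ["(10, 20)"],
                              "voice": ["click", "scroll"]}):
        print(event)
```

Routing everything through one queue keeps the logger as the only writer of the interaction log, which avoids locking around the log itself.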

Context

Use Cases

- Accessible Browsing

Hands-free navigation for users with disabilities.

- Automated Testing (A-TDD)

Generate and replay acceptance tests for regression.

- Accessibility Evaluation

Collect interaction data for consultants and researchers.

- Intelligent Agents

[TBD] Enable bots to navigate and test web UIs via gaze & speech.